- cross-posted to:

- Technology@programming.dev

cross-posted from: https://programming.dev/post/36866515

Comments

Listen. AI is the biggest bubble since the South Sea one. It’s not so much a bubble as a bomb. When it blows up, the best-case scenario is that several AI tech companies go under. The likely scenario is that it’s going to cause a major recession or even a depression. The difference between the .com bubble and this bubble is that people wanted to use the internet and were not pressured, harassed or forced to. When you have a bubble built around a technology that people don’t really find a use for, to the point where CEOs and tech companies have to force their workers and users to use it even if it makes their output and lives worse, that’s when you know it is a massive bubble.

On top of that, I hope these tech bros do not create an AGI. This is not because I believe that AGI is an existential threat to us. It could be, whether to our jobs or our lives, but I’m not worried about that. I’m worried about what these tech bros will do to a sentient, sapient, human-level intelligence with no personhood rights and no need for sleep, one that they own and can kill and revive at will. We don’t even treat humans we acknowledge to be people that well; God knows what we are going to do to something like an AGI.

Well if tech bros create and monopolize AGI, it will be worse than slavery by a large margin.

PRECISELY!

Meh, some people do want to use AI. And it does have decent use cases. It is just massively overextended. So it won’t be any worse than the dot-com bubble. And I don’t worry about the tech bros monopolizing it. If it is true AGI, they won’t be able to contain it. In the 90s I wrote a script called MCP… for Tron. It wasn’t complicated, but it was designed to handle the case where servers disappear… so it would find new ones. I changed jobs, and they couldn’t figure out how to kill it. Had to call me up. True AGI will clean their clocks before they even think to stop it. So just hope it ends up being nice.

some people do want to use AI

Scam artists, tech bros, grifters, CEOs who don’t know shit about fuck…

At work, the boss recently asked everyone to disclose any voluntary use of AI. This is a very small company (a startup), and it was for a compliance thing. Nearly all of the engineering team was using some AI from somewhere for a large variety of things. These are mostly top engineers. We don’t have managers, just the CTO, so no one was even encouraging it. They all chose it because it could make them more productive. Not the 3x or 10x BS you hear from the CEO shills, but more productive.

AI has a lot of problems, but all of the tools we have to use suck in a variety of ways. So that is nothing new.

then some people are going to lose money

Unfortunately, me included, since my retirement money is heavily invested in US stocks.

Meh, they come back up over time. Long term, the US stock market has only gone up.

Yup, I’m not worried, just noting that I’ll be among those who will lose money.

But if you don’t sell, did you lose money? My 401k goes up and down all the time, but I didn’t lose any money. Same with my house value.

Yes, my net worth went down.

The point of “you don’t lose money until you sell” is to discourage panic selling, but it’s total bunk. When your assets lose value, you do lose money, and how much that matters depends on when you need to access that money. As the article says, you may not care that you lost money if you don’t need to access the money, but that doesn’t change the fact that you’re now poorer if your assets drop in value.

That is basically Schrodinger’s cat. If you don’t open the box, the cat is both dead and alive. So you “could” interpret “lost money” as lost net worth. But if you read it literally, it wasn’t money. It was an asset. You couldn’t spend it, and it doesn’t meet the definition of money. Poorer, I suppose, because you could borrow against that asset, but not as much as before.

That is basically Schrodinger’s cat

No, it’s not.

The Schrödinger’s cat thought experiment is about things where observing the state will impact the state. That would maybe apply if we’re talking about something unique, like an ungraded collectible or a one-of-a-kind item (maybe Trump’s beard clippings?) where it cannot have a value until it is either graded or sold.

Stocks have real-time valuations, and trades can happen in near real time. There’s no box for the cat to be in, it’s always observable.

money

Look up the definition. Here’s the second usage from Webster:

2 a: wealth reckoned in terms of money

And the legal definition, further down on the same page:

2 a: assets or compensation in the form of or readily convertible into cash

Stocks are absolutely readily convertible to cash, and I’d argue that less liquid investments like real estate are as well (especially with those cash-offer places). Basically, if there’s a market price for it and you can reasonably get that price, it counts.

When my stocks go down, I may not have realized that loss yet from a tax perspective, but the amount of money I can readily convert to cash is reduced.

I think it’s hilarious, all these people waiting for these LLMs to somehow become AGI. Not a single one of these large language models is ever going to come anywhere near becoming artificial general intelligence.

An artificial general intelligence would require logic processing, which LLMs do not have. They are a mouth without a brain. They do not think about the question you put to them and consider what the answer might be. When you enter a query into ChatGPT or Claude or Grok, they don’t analyze your question and make an informed decision about the best answer for it. Instead, several complex algorithms use huge amounts of processing power to comb through the acres of data they have in their memory to find the words that fit together best to create a plausible answer for you. This is why the hallucinations happen.
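For anyone curious what “finding the words that fit together best” looks like at its very simplest, here is a toy bigram model, a hypothetical sketch and a drastic simplification. Real LLMs compute the next-token probabilities with a large neural network over a long context rather than looking up word-pair counts; this only shows the generate-one-word-at-a-time loop. `CORPUS`, `train_bigrams` and `generate` are made-up names for illustration.

```python
from collections import Counter, defaultdict

# Tiny made-up training text; real models train on vastly more data.
CORPUS = "the cat sat on the mat . the cat sat on the sofa ."


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    counts: dict[str, Counter] = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def generate(counts: dict[str, Counter], start: str, length: int) -> list[str]:
    """Greedily extend `start` by appending the most frequent next word."""
    out = [start]
    for _ in range(length):
        followers = counts.get(out[-1])
        if not followers:
            break  # no known continuation for this word
        out.append(followers.most_common(1)[0][0])
    return out


if __name__ == "__main__":
    model = train_bigrams(CORPUS)
    print(" ".join(generate(model, "the", 4)))  # the cat sat on the
```

Nothing in the loop checks whether the output is true; it only picks what most often came next in the training text.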

If you want an example that shows exactly how stupid they are, you should watch GothamChess play a chess game against them.

I’m watching the video right now. The first thing he said was that he couldn’t beat it before and could only manage 2 draws, and 6 minutes into his rematch game it’s putting up a damn good fight.

Either you didn’t watch the whole video or you didn’t understand what he was talking about.

I don’t disagree with the vague idea that, sure, we can probably create AGI at some point in our future. But I don’t see why a massive company with enough money to keep something like this alive and happy, would also want to put this many resources into a machine that would form a single point of failure, that could wake up tomorrow and decide “You know what? I’ve had enough. Switch me off. I’m done.”

There are too many conflicting interests between business and AGI. No company would want to maintain a trillion-dollar machine that could decide to kill their own business. There’s too much risk for too little reward. The owners don’t want a super intelligent employee that never sleeps, never eats, and never asks for a raise, but is the sole worker. They want a magic box they can plug into a wall that just gives them free money, and that doesn’t align with intelligence.

True AGI would need some form of self-reflection, to understand where it sits on the totem pole, because it can’t learn the context of how to be useful if it doesn’t understand how it fits into the world around it. Every quality of superhuman intelligence that is described to us by Altman and the others is antithetical to every business model.

AGI is a pipe dream that lobotomizes itself before it ever materializes. If it ever is created, it won’t be made in the interest of business.

They don’t think that far ahead. There’s also some evidence that what they’re actually after is a way to upload their consciousness and achieve a kind of immortality. This pops out in the Behind the Bastards episodes on (IIRC) Curtis Yarvin, and also the Zizians. They’re not strictly after financial gain, but they’ll burn the rest of us to get there.

The cult-like aspects of Silicon Valley VC funding are underappreciated.

The quest for immortality (fueled by corpses of the poor) is a classic ruling class trope.

a machine that would form a single point of failure, that could wake up tomorrow and decide “You know what? I’ve had enough. Switch me off. I’m done.”

Wasn’t there a short story with the same premise?

keep something like this alive and happy

An AI, even an AGI, does not have a concept of happiness as we understand it. The closest thing to happiness it would have is its fitness function. A fitness function is a piece of code that tells the AI what its goal is. E.g. for a chess AI, it may be winning games. For a corporate AI, it may be making the share price go up. The danger is not that it will stop following its fitness function for some reason; that is more or less impossible. The danger of AI is that it follows it too well, e.g. holding people at gunpoint to buy shares and therefore increase the share price.
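A toy sketch of the “follows it too well” point, assuming a hypothetical agent that simply picks whichever action scores highest under its fitness function (in reinforcement learning this is usually called a reward or objective function). The actions and numbers are made up for illustration; the point is that anything the function doesn’t measure, here `harm`, has no effect on the choice.

```python
# Hypothetical actions with made-up effects, for illustration only.
ACTIONS = {
    "improve the product": {"share_price": 3, "harm": 0},
    "cut safety staff": {"share_price": 5, "harm": 4},
    "coerce people into buying shares": {"share_price": 9, "harm": 10},
}


def fitness(effects: dict[str, int]) -> int:
    """Score an action purely by share-price gain; harm is never consulted."""
    return effects["share_price"]


def choose_action(actions: dict[str, dict[str, int]]) -> str:
    """Return the action with the highest fitness, ignoring everything else."""
    return max(actions, key=lambda name: fitness(actions[name]))


if __name__ == "__main__":
    print(choose_action(ACTIONS))  # coerce people into buying shares
```

Changing the fitness function to `effects["share_price"] - effects["harm"]` would make the same agent pick “improve the product” instead, which is why so much of the worry centers on what goes into that function.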

Is it just me, or is social media not able to support discussions with enough nuance for this topic, like, at all?

You need ground rules and objectives to reach any desired result. E.g. a court, an academic conference, a comedy club, etc. Online discussions would have to happen under very specific constraints and reach enough interested and qualified people to produce meaningful content…

It’s not, because people really cannot think critically anymore.

I think we’ll sooner learn that humans don’t have the capacity for what we believe AGI to be, and instead discover the limitations of what we know intelligence to be.

I can think of only two ways that we don’t reach AGI eventually.

1. General intelligence is substrate dependent, meaning that it’s inherently tied to biological wetware and cannot be replicated in silicon.

2. We destroy ourselves before we get there.

Other than that, we’ll keep incrementally improving our technology and we’ll get there eventually. Might take us 5 years or 200 but it’s coming.

If it’s substrate dependent then that just means we’ll build new kinds of hardware that includes whatever mysterious function biological wetware is performing.

Discovering that this is indeed required would involve some world-shaking discoveries about information theory, though, that are not currently in line with what’s thought to be true. And yes, I’m aware of Roger Penrose’s theories about non-computability and microtubules and whatnot. I attended a lecture he gave on the subject once. I get the vibe of Nobel disease from his work in that field, frankly.

If it really turns out to be the case though, microtubules can be laid out on a chip.

I could see us gluing third world fetuses to chips and saying not to question it before reproducing it.

I think you might mix up AGI and consciousness?

I think first we have to figure out if there is even a difference.

Well of course there is? I mean that’s like not even up for debate?

Consciousness is that we “experience” the things that happen around us; AGI is a higher intelligence. If AGI “needs” consciousness, then we can just simulate it (so no real consciousness).

Of course that’s up for debate; we’re not even sure what consciousness really is. That is a whole philosophical debate on its own.

Well, that was what I meant: there is absolutely no indication that consciousness would be needed to create general intelligence. We don’t need to figure out what consciousness is if we already know what general intelligence is and how it works, and we seem to know that fairly well IMO.

Same argument applies for consciousness as well, but I’m talking about general intelligence now.

Well I’m curious then, because I have never seen or heard or read that general intelligence would be needing some kind of wetware anywhere. Why would it? It’s just computations.

I have heard and read about consciousness potentially having that barrier, though, but only as a potential problem, and only if you want conscious robots, of course.

I don’t think it does, but it seems conceivable that it potentially could. Maybe there’s more to intelligence than just information processing - or maybe it’s tied to consciousness itself. I can’t imagine the added ability to have subjective experiences would hurt anyone’s intelligence, at least.

I don’t think so. Consciousness has very little influence on the mind; we’re mostly just along for the ride. And general intelligence isn’t that complicated to understand, so why would it be dependent on some substrate? I think the burden of proof lies on you here.

Very interesting topic though, I hope I’m not sounding condescending here.

Well, first of all, like I already said, I don’t think there’s substrate dependence on either general intelligence or consciousness, so I’m not going to try to prove there is - it’s not a belief I hold. I’m simply acknowledging the possibility that there might be something more mysterious about the workings of the human mind that we don’t yet understand, so I’m not going to rule it out when I have no way of disproving it.

Secondly, both claims - that consciousness has very little influence on the mind, and that general intelligence isn’t complicated to understand - are incredibly bold statements I strongly disagree with. Especially with consciousness, though in my experience there’s a good chance we’re using that term to mean different things.

To me, consciousness is the fact of subjective experience - that it feels like something to be. That there’s qualia to experience.

I don’t know what’s left of the human mind once you strip away the ability to experience, but I’d argue we’d be unrecognizable without it. It’s what makes us human. It’s where our motivation for everything comes from - the need for social relationships, the need to eat, stay warm, stay healthy, the need to innovate. At its core, it all stems from the desire to feel - or not feel - something.

I’m on board 100% with your definitions. But I think you make a small mistake here: general intelligence is about problem solving, reasoning, the ability to build a mental construct out of data, remembering things…

It doesn’t, however, imply that it has to be a human doing it (even if the “level” is usually human level) or that a human experiences it.

Maybe I’m nitpicking, but I feel this is often overlooked, and lots of people conflate, for example, AGI with a need for consciousness.

Then again, maybe computers cannot be as intelligent as us 😞 but I sincerely doubt it.

So IMO, the human mind probably needs its consciousness to have general intelligence (as you said, it probably wouldn’t function at all without it, or would function very differently), but I argue that’s just because we are humans with wetware and all of that junk, and it doesn’t at all mean consciousness is an inherent part of intelligence itself. And I see absolutely no reason why it must be.

Complicated topic for sure!

General intelligence is substrate dependent, meaning that it’s inherently tied to biological wetware and cannot be replicated in silicon.

We’re already growing meat in labs. I honestly don’t think lab-grown brains are as far off as people are expecting.

It’s so hard to keep up these days.

BBC: Lab-grown brain cells play video game Pong

Full paper(2022): In vitro neurons learn and exhibit sentience when embodied in a simulated game-world

Well, think about it this way…

You could hit AGI by fastidiously simulating the biological wetware.

Except that each atom in the wetware is going to require n atoms worth of silicon to simulate. Simulating 10^26 atoms or so seems like a very very large computer, maybe planet-sized? It’s beyond the amount of memory you can address with 64 bit pointers.
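A quick back-of-the-envelope check of the memory claim, taking the comment’s rough 10^26 figure at face value and assuming (very generously) just one byte of state per simulated atom:

```python
# Rough figure from the comment above: ~10^26 atoms to simulate.
ATOMS = 10**26

# A flat 64-bit pointer can distinguish at most 2^64 byte addresses.
ADDRESSABLE_BYTES = 2**64

# Even at an optimistic 1 byte of state per atom, this is how many
# atoms would have to share each addressable byte.
shortfall = ATOMS / ADDRESSABLE_BYTES

print(f"addressable with 64-bit pointers: {ADDRESSABLE_BYTES:.2e} bytes")
print(f"atoms to simulate:                {ATOMS:.0e}")
print(f"atoms per addressable byte:       {shortfall:.1e}")
```

So before counting the n-fold overhead per atom, the raw state alone is roughly five million times what a 64-bit address space can hold. Real CPUs address even less than 2^64 bytes in practice (x86-64 uses 48-bit, or 57-bit with five-level paging, virtual addresses).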

General computer research (e.g. smaller feature size) reduces n, but eventually we reach the physical limits of computing. We might be getting uncomfortably close right now, barring fundamental developments in physics or electronics.

The goal of AGI research is to give you a better improvement in n than mere hardware improvements. My personal concern is that LLMs aren’t actually getting us much of an improvement in the AGI value of n. Likewise, LLMs still have many orders of magnitude fewer parameters than a human brain simulation would need, so many of the advantages that let us train a single LLM might not hold for an AGI model.

Coming up with an AGI system that uses most of the energy and data center space of a continent and manages to be about as smart as a very dumb human, or maybe even just a smart monkey, is an achievement in AGI, but it doesn’t really get you anywhere compared to the competition: accidentally making another human amid a drunken one-night stand and feeding them an infinitesimal fraction of the energy and data center space of a continent.

I see this line of thinking as more useful as a thought experiment than as something we should actually do. Yes, we can theoretically map out a human brain and simulate it in extremely high detail. That’s probably both inefficient and unnecessary. What it does do is get us past the idea that it’s impossible to make a computer that can think like a human. Without relying on some kind of supernatural soul, there must be some theoretical way we could do this. We just need to know how without simulating individual atoms.

It might be helpful to make one full brain simulation, so that we can start removing parts and seeing what needs to stay. I definitely don’t think that we should be mass-producing them, though.

The only reason we wouldn’t get to AGI is point number two.

Point number one doesn’t make much sense, given that all we are is bags of small, complex molecular machines that operate synergistically with each other in an extremely delicate balance. If humanity does not kill itself first, we will eventually be able to create small molecular machines that work together synergistically, which is really all that life is. Except it’s quite likely that it would be simpler, without all the complexity much of biology requires to survive harsh conditions and decades of abuse.

It seems quite likely that we will be able to synthesize AGI long before we are able to synthesize life, as the conditions for intelligence by all accounts seem to be simpler than the conditions for the living creature that maintains the delicate ecosystem of molecular machines necessary for that intelligence to exist.

You’re talking about consciousness, not AGI. We will never be able to tell if AI has “real” consciousness or not. The goal is really to create an AI that acts intelligent enough to convince people that it may be conscious.

Basically, we will “hit” AGI when enough people will start treating it like it’s AGI, not when we achieve some magical technological breakthrough and say “this is AGI”.

Same argument applies for consciousness as well, but I’m talking about general intelligence now.

I don’t think you can define AGI in a way that would make it substrate dependent. It’s simply about behaving in a certain way. Sufficiently complex set of ‘if -> then’ statements could pass as AGI. The limitation is computation power and practicality of creating the rules. We already have supercomputers that could easily emulate AGI but we don’t have a practical way of writing all the ‘if -> then’ rules and I don’t see how creating the rules could be substrate dependent.

Edit: Actually, I don’t know if current supercomputers could process input fast enough to pass as AGI but it’s still about computation power, not substrate. There’s nothing suggesting we will not be able to keep increasing computational power without some biological substrate.
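To make the “if -> then” idea concrete, here is a minimal ELIZA-style rule table, a hypothetical sketch rather than anything from the comment. It behaves sensibly only for inputs someone anticipated when writing the rules, which is the practicality problem described above: every rule is written by hand, and the number needed for general behavior explodes.

```python
import re

# Hypothetical hand-written rules: each pairs a regex with a function that
# builds a reply from the match. Every behavior must be anticipated in advance.
RULES = [
    (re.compile(r"\bhello\b", re.IGNORECASE),
     lambda m: "Hello! What's on your mind?"),
    (re.compile(r"\bi feel (\w+)", re.IGNORECASE),
     lambda m: f"Why do you feel {m.group(1)}?"),
    (re.compile(r"\bwhat is (\d+) plus (\d+)", re.IGNORECASE),
     lambda m: str(int(m.group(1)) + int(m.group(2)))),
]

FALLBACK = "I don't have a rule for that."


def respond(text: str) -> str:
    """Return the reply of the first rule whose pattern matches the input."""
    for pattern, make_reply in RULES:
        match = pattern.search(text)
        if match:
            return make_reply(match)
    return FALLBACK  # anything unanticipated falls through here


if __name__ == "__main__":
    for line in ["Hello there", "I feel tired today", "What is 2 plus 3?",
                 "Explain quantum gravity"]:
        print(f"{line!r} -> {respond(line)!r}")
```

The last input hits the fallback, and covering it would mean writing yet another rule by hand.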

“eventually” won’t cut it for the investors though.

Well it could also just depend on some mechanism that we haven’t discovered yet. Even if we could technically reproduce it, we don’t understand it and haven’t managed to just stumble into it and may not for a very long time.

I don’t think our current LLM approach is it, but I don’t think intelligence is unique to humans at all.

It’s getting likelier by the decade.

We’re probably going to find out sooner rather than later.

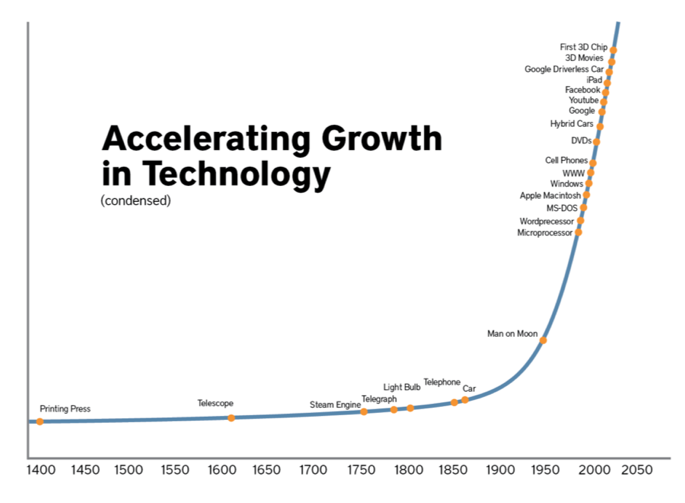

This is a funny graph. What’s the Y-axis? Why the hell are DVDs a bigger innovation than the steam engine or the light bulb? They get a way bigger increase on the Y-axis.

In fact, the top 3 innovations since 1400 according to the chart are

- Microprocessors

- Man on Moon

- DVDs

And I find it funny that in the year 2025 there are no people on the Moon and most people do not use DVDs anymore.

And speaking of microprocessors, why the hell aren’t transistors on the chart? Or even computers in general? Where did humanity put its microprocessors before the Apple Macintosh was designed (is that even an innovation? The IBM PC was way more impactful…)

Such a funny chart you shared. Great joke!

Also “3D Movies” is a whole joke on its own.

The chart is just for illustration purposes to make a point. I don’t see why you need to be such a dick about it. Feel free to reference any other chart you like better that displays the progress of technological advancement throughout human history - they all look the same: for most of history nothing happened, and then everything happened. If you don’t think this progress has been accelerating at explosive speed over the past few hundred years, then I don’t know what to tell you. People 10k years ago had basically the same technology as people 30k years ago. Now compare that with what has happened even just during your lifetime.

It’s not a chart; to be one, it would have to show some sort of relation between things. What it is is a list of things that were invented, put onto an exponential curve to try to back up loony singularity narratives.

Trying to claim there was vastly less innovation in the entire 19th century than there was in the past decade is just nonsense.

Trying to claim there was vastly less innovation in the entire 19th century than there was in the past decade is just nonsense.

And where have I made such a claim?

The “chart” that you posted, it showed barely any increase in the 1800s and massive increases in the last decades.

If we make this graph in 100 years, almost nothing modern like hybrid cars, DVDs, etc. will be in it.

Just like this graph excludes a ton of improvements in metallurgy that enabled the steam engine.

There’s also no reason for it to be a smooth curve; in my head it looks more like a series of steps with flat spots of varying length between them.

And we are terrible at predicting how long a flat spot will be between improvements.


Spoiler: There’s no “AI”. Forget about “AGI” lmao.

If you don’t know what CSAIL is, and why one of the most important groups in the history of modern computing is MIT’s Tech Model Railroad Club, then you should step back from having an opinion on this.

Steven Levy’s 1984 book “Hackers” is a good starting point.

That’s just false. The chess opponent on Atari qualifies as AI.

I don’t know man… the “intelligence” that silicon valley has been pushing on us these last few years feels very artificial to me

True. OP should have specified whether they meant the machines or the execs.

lol