But but but, Daddy CEO said that RTO combined with Gen AI would mean continued, infinite growth and that we would all prosper, whether corposerf or customer!

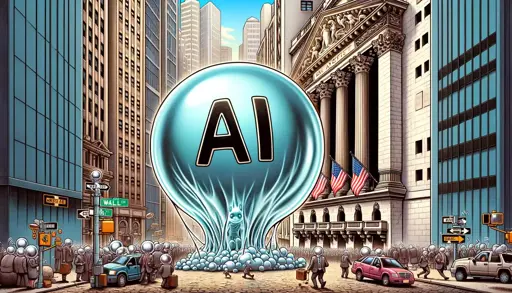

Thank fucking Christ. Now hopefully the AI bubble will burst along with it and I won't have to listen to techbros drone on about how it's going to replace everything, which is definitely something you do not want to happen in a world where we sell our ability to work in exchange for money, goods and services.

Amen to that 🙏

I had a shipment from Amazon recently with an order that was supposed to include 3 items but actually only had 2 of them. Amazon marked all 3 of my items as delivered. So I got on the web site to report it and there is no longer any direct way to report it. I ended up having to go thru 2 separate chatbots to get a replacement sent. Ended up wasting 10 minutes to report a problem that should have taken 10 seconds.

Sounds like everything’s working as intended from Amazon’s perspective.

I use it almost every day, and most of those days, it says something incorrect. That’s okay for my purposes because I can plainly see that it’s incorrect. I’m using it as an assistant, and I’m the one who is deciding whether to take its not-always-reliable advice.

I would HARDLY contemplate turning it loose to handle things unsupervised. It just isn’t that good, or even close.

These CEOs and others who are trying to replace CSRs are caught up in the hype from Eric Schmidt and others who proclaim “no programmers in 4 months” and similar. Well, he said that about 2 months ago and, yeah, nah. Nah.

If that day comes, it won’t be soon, and it’ll take many, many small, hard-won advancements. As they say, there is no free lunch in AI.

It is important to understand that most of the job of software development is not making the code work. That’s the easy part.

There are two hard parts:

- Making code that is easy to understand, modify as necessary, and repair when problems are found.

- Interpreting what customers are asking for. Customers usually don't have the vocabulary and knowledge of the inside of a program that they would need to articulate exactly what they want.

In order for AI to replace programmers, customers will have to start accurately describing what they want the software to do, and AI will have to start making code that is easy for humans to read and modify.

This means that good programmers’ jobs are generally safe from AI, and probably will be for a long time. Bad programmers and people who are around just to fill in boilerplates are probably not going to stick around, but the people who actually have skill in those tougher parts will be AOK.

A good systems analyst, who can effectively translate user requirements into accurate statements, does not need to be a programmer. Good systems analysts are generally more adept at asking clarifying questions, challenging assumptions and sussing out needs. Good programmers will still be needed, but their time is wasted on gathering requirements.

Most places don't have all good systems analysts.

True.

For this to make sense, AI has to replace product-oriented roles too. Some C-level person says "make products go brrrrrr" and it does everything.

What is a systems analyst?

I never worked in a big enough software team to have any distinction other than “works on code” and “does sales work”.

The field I was in was all small places that were very specialized in what they worked on.

When I ran my own company, it was just me. I did everything that the company needed to take care of.

A systems analyst is like a programmer analyst without the coding. I agree: in my experience, small shops were more likely to have just programmer analysts, often responsible for hardware as well.

If it’s just you I hope you didn’t need a systems analyst to gather requirements and then work with the programmer to implement them. If you did, might need another kind of analysis. ;)

And a lot of burnt carbon to get there :(

Have you ever played a 3D game?

It's always funny how companies who want to adopt some flashy new tech never listen to the specialists who understand whether it's even worth a single cent, and they always fall on their stupid faces.

So providing NO assistance to customers turned out to be a bad idea?

THE MOST UNPREDICTABLE OUTCOME IN THE HISTORY OF CUSTOMER SERVICE!

From what I've seen so far, I think I can safely say the only thing AI can truly replace is CEOs.

I was thinking about this the other day and don’t think it would happen any time soon. The people who put the CEO in charge (usually the board members) want someone who will make decisions (that the board has a say in) but also someone to hold accountable for when those decisions don’t realize profits.

AI is unaccountable in any real sense of the word.

> AI is unaccountable in any real sense of the word.

Doesn’t stop companies from trying to deflect accountability onto AI. Citations Needed recently did an episode all about this: https://citationsneeded.medium.com/episode-217-a-i-mysticism-as-responsibility-evasion-pr-tactic-7bd7f56eeaaa

I suppose that makes perfect sense. A corporation is an accountability sink for owners, board members and executives, so why not also make AI accountable?

I was thinking more along the lines of the “human in the loop” model for AI where one human is responsible for all the stuff that AI gets wrong despite it physically not being possible to review every line of code an AI produces.

You’ve heard of Early Adopters

Now get ready for Early Abandoners.

If my ISP's customer support doesn't even know what CGNAT is, but the AI does, I'm honestly torn on whether this is a good move or not.

See, that's just it: the AI doesn't know either; it just repeats things which approximate those that have been said before.

If it has any power to make changes to your account, then it's going to be mistakenly turning people's services on or off, leaking details, etc.

> it just repeats things which approximate those that have been said before.

That's not correct, and it oversimplifies how LLMs work. I agree with the spirit of what you're saying, though.

You’re wrong but I’m glad we agree.

I’m not wrong. There’s mountains of research demonstrating that LLMs encode contextual relationships between words during training.

There’s so much more happening beyond “predicting the next word”. This is one of those unfortunate “dumbing down the science communication” things. It was said once and now it’s just repeated non-stop.

If you really want a better understanding, watch this video:

And before your next response starts with “but Apple…”

Their paper has had many holes poked into it already. Also, it’s not a coincidence their paper released just before their WWDC event which had almost zero AI stuff in it. They flopped so hard on AI that they even have class action lawsuits against them for their false advertising. In fact, it turns out that a lot of their AI demos from last year were completely fabricated and didn’t exist as a product when they announced them. Even some top Apple people only learned of those features during the announcements.

Apple’s paper on LLMs is completely biased in their favour.

Defining contextual relationship between words sounds like predicting the next word in a set, mate.

Only because it is.

Not at all. It’s not “how likely is the next word to be X”. That wouldn’t be context.

I’m guessing you didn’t watch the video.

AI is worse for the company than outsourcing overseas to underpaid call centers. That is how bad AI is at replacing people right now.

Can we get our customer service off of "X, formerly known as Twitter" too while we're at it?

And Discord. For fuck's sake, I hate it when a project has replaced a forum with Discord. They are not the same thing.

Sure, once it is no longer one of the most popular social media platforms.

If I have to deal with AI for customer support then I will find a different company that offers actual customer support.

I hope they all go under. I’ve no sympathy for them and I wish nothing but the worst for them.

I will note that AI customer service could be an improvement. Customer service helpline jobs are one of the worst jobs to get paid peanuts to do.

Of course, my preference is to upgrade the crap voice recognition system with an AI voice recognition system, which is way better at understanding words. The help desk jockeys can stay, as they do the real work.

My company gets a lot of incoming chats from customers (and potential customers).

The challenge on this side of the business is that 98% of the questions asked over chat are already answered on the very website the person started the chat from. Like, it's all written right there!

So real human chat agents are reduced to copy paste monkeys in most interactions.

But here's the rub. The people asking the questions fit into one of two groups: those who aren't smart or patient enough to read (an unfortunate waste of our resources), and those who are checking whether our business has real humans and is responsive before they buy.

It's that latter group for whom we must keep red-blooded, educated and service-minded humans on the job to respond, and this is where small companies can really kick ass next to behemoths like Google, which brings in over $1m per employee but still can't seem to afford a phone line to support your account.

Replace all the customer-facing employees with chimpanzees on webcams that say in sign language: read what's on the website. Whenever someone calls in or opens a chat, they're connected with a chimp. Be sure to also include a guide to ASL on the company website. I guarantee sales will go up.