Apple is the best on privacy, though, right?

Tell me you didn’t read the article without telling me you didn’t read the article.

The entire thing is explaining how they are upholding privacy to do this training.

- It’s opt-in only (if you don’t choose to share analytics, nothing is collected).

- They use differential privacy (adding noise so they get trends, not individual data).

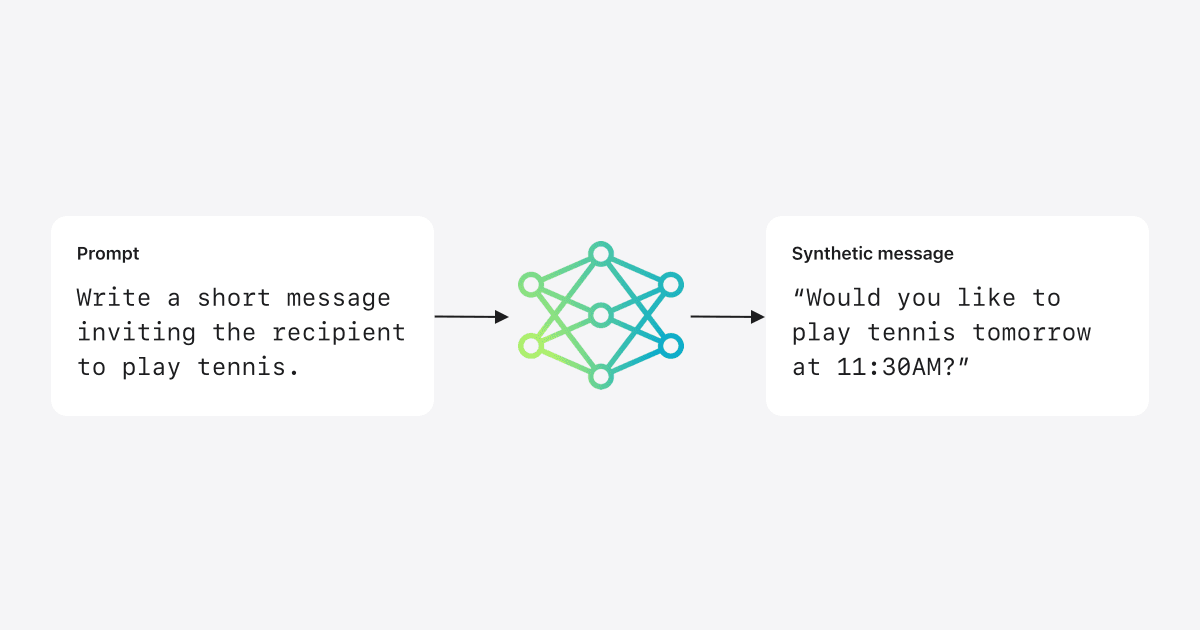

- They developed a new method to train on text patterns without collecting actual messages or emails from devices. (link to research on arXiv)
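For anyone wondering how "adding noise so they get trends, not individual data" can work in practice, here is a minimal sketch of local differential privacy using k-ary randomized response. It assumes each device reports only which of a few server-supplied synthetic embeddings is closest to its own data. This is a simplified illustration and not Apple's actual implementation: the candidate embeddings, the true mix of device data, the ε value, and all function names are hypothetical.

```python
import math
import random
from collections import Counter


def closest_candidate(local_vec, candidates):
    """On device: index of the synthetic embedding nearest (squared Euclidean) to a local one."""
    return min(
        range(len(candidates)),
        key=lambda i: sum((a - b) ** 2 for a, b in zip(local_vec, candidates[i])),
    )


def randomize(true_index, k, epsilon, rng):
    """On device: k-ary randomized response, which satisfies epsilon-local differential privacy."""
    p = math.exp(epsilon) / (math.exp(epsilon) + k - 1)
    if rng.random() < p:
        return true_index
    # Otherwise report one of the other k - 1 indices uniformly at random.
    other = rng.randrange(k - 1)
    return other if other < true_index else other + 1


def estimate_frequencies(reports, k, epsilon):
    """On the server: debias the noisy counts into an estimate of the true mix."""
    n = len(reports)
    p = math.exp(epsilon) / (math.exp(epsilon) + k - 1)  # P(report == true index)
    q = 1 / (math.exp(epsilon) + k - 1)                  # P(report == one specific other index)
    counts = Counter(reports)
    return [(counts[i] / n - q) / (p - q) for i in range(k)]


def simulate(n=20_000, epsilon=1.0, seed=0):
    """Simulate n devices, each sending one noisy report; return the server's estimates."""
    rng = random.Random(seed)
    candidates = [(0.0, 0.0), (5.0, 5.0), (10.0, 0.0)]  # hypothetical synthetic embeddings
    weights = [0.6, 0.3, 0.1]                            # hypothetical true mix across devices
    reports = []
    for _ in range(n):
        source = rng.choices(range(len(candidates)), weights=weights)[0]
        local_vec = tuple(c + rng.gauss(0.0, 0.5) for c in candidates[source])
        true_index = closest_candidate(local_vec, candidates)
        reports.append(randomize(true_index, len(candidates), epsilon, rng))
    return estimate_frequencies(reports, len(candidates), epsilon)


print([round(f, 2) for f in simulate()])
```

With ε = 1 and three candidates, any single device reports its true answer only about 58% of the time, so no individual report can be trusted, yet the server's aggregate estimate still lands near the 60/30/10 mix. Lowering ε adds more noise per report, which means more devices are needed for the same accuracy.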

Right. There’s plenty to criticise Apple for, both in general and for chasing the AI trend, but looking at it purely in terms of user privacy within AI features they’re miles ahead of the competition.

I had scanned through it, and it looked like the exact same stuff that Google and Microsoft say. Paraphrasing: “we value your privacy,” “we’re de-identifying your data,” “the processing occurs on-device”…

Apple probably is better on privacy than other big tech corpos, but it’s a race to the bottom, and they’re definitely participating in the race.

To be honest, it’s important enough that it should be in the title, since privacy is Apple’s selling point.

Yeah, that’s on OP. The article is actually titled, “Understanding Aggregate Trends for Apple Intelligence Using Differential Privacy.”

Yes, they have said so themselves.

Was working on a simulator and needed random interaction data. Statistical randomness didn’t capture likely scenarios (bell curves and all that). Switched to LLM synthetic data generation. Seemed better, but wait… seemed off 🤔. Checked it for clustering and entropy vs human data. JFC. Waaaaaay off.

Lesson: synthetic data for training is a Bad Idea. There are no shortcuts. Humans are lovely and messy.
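One way to run the kind of entropy check described above is to compare the Shannon entropy of the event distributions in human and synthetic logs. This is a minimal sketch, assuming interactions are logged as discrete event labels; the event names and sample logs are made up for illustration, and the commenter doesn’t say which metric they actually used.

```python
import math
from collections import Counter


def shannon_entropy(events):
    """Shannon entropy, in bits, of the empirical distribution of discrete events."""
    counts = Counter(events)
    n = len(events)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


# Hypothetical sample logs: one event label per interaction.
human_log = ["click", "scroll", "back", "search", "click", "idle", "scroll", "share"]
synthetic_log = ["click", "click", "scroll", "click", "click", "scroll", "click", "click"]

h_human = shannon_entropy(human_log)
h_synth = shannon_entropy(synthetic_log)
print(f"human: {h_human:.2f} bits, synthetic: {h_synth:.2f} bits")
# prints "human: 2.50 bits, synthetic: 0.81 bits"
```

In this toy case the synthetic log keeps repeating the same two actions, so its entropy is far lower than the human log’s. A real check would use much larger logs and would likely pair entropy with a clustering comparison.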

Holy crap, this is really intrusive. It’s opt-in, but who would opt in to this harvesting at all?

It would be nice if they actually fixed the stability issues in Apple Intelligence before they start adding more layers of slop to it. Writing Tools summarization has been broken on and off since it launched.